Photo by Tomas Sobek

Introduction

Recently I was assigned the following task:

Our customers need to be able to create a zip file containing multiple files from Azure Storage and then download it. It needs to be FAST.

The only thing that matters to us is speed; we don't care about the size of the generated zip file. So the plan is to take all the files and put them into the zip archive without compressing them. That makes the task heavily dependent on CPU, network and storage throughput.

Here's my plan:

- POST a list of file paths to an Azure Function (HTTP trigger).

- Create a queue message containing the file paths and put it on a storage queue.

- Listen to said storage queue with another Azure Function (queue trigger).

- Stream each file from Azure Storage -> Add it to a Zip stream -> Stream it back to Azure storage.

We need to support very large files (100 GB+), so it's important that we don't max out the memory. The benefit of using streams here is that memory consumption stays low and stable, no matter how large the files are.
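To illustrate why streaming keeps memory flat, here is a minimal sketch (not from the original project; `FixedBufferCopy` is a hypothetical name) that copies any source stream to any destination through a single fixed-size buffer. However large the source is, only `bufferSize` bytes are held at a time. `Stream.CopyToAsync` does essentially the same thing internally.

```csharp
using System;
using System.IO;
using System.Threading;
using System.Threading.Tasks;

public static class FixedBufferCopy
{
    // Copies source to destination using one reusable buffer and returns the byte count.
    // Memory use is bounded by bufferSize, not by the length of the source.
    public static async Task<long> CopyAsync(
        Stream source,
        Stream destination,
        int bufferSize = 81920,
        CancellationToken cancellationToken = default)
    {
        var buffer = new byte[bufferSize];
        long total = 0;
        int read;

        // Read a chunk, write exactly that chunk, repeat until the source is exhausted.
        while ((read = await source.ReadAsync(buffer.AsMemory(0, buffer.Length), cancellationToken)) > 0)
        {
            await destination.WriteAsync(buffer.AsMemory(0, read), cancellationToken);
            total += read;
        }

        return total;
    }
}
```

The same pattern applies whether the streams are local files or network streams such as a blob download and a blob upload.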

Prerequisites

- Azure storage account (Standard V2)

- Azure storage queue ("create-zip-file")

- App Service Plan (we will use a dedicated one).

- SharpZipLib - library for working with zip files.

Code

Http portion

Let's start with the first function, the one responsible for validating and publishing messages to the storage queue.

Here's an example body that we will POST to our function (all files exist in my Azure Storage account):

```json
{
  "containerName": "fileupload",
  "filePaths": [
    "8k-sample-movie.mp4",
    "dog.jpg",
    "dog2.jpg",
    "ubuntu-18.04.5-desktop-amd64.iso",
    "ubuntu-20.10-desktop-amd64.iso"
  ]
}
```

Note that I've dumbed this down a little just to make it easier to follow. In production we will send in SAS URIs instead of file paths and container name.
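The post doesn't show `CreateZipFileInput` itself. Judging from how it is used, it has a `ContainerName` and a `FilePaths` property. The sketch below is a guess at its shape: the DataAnnotations attributes and the `CreateZipFileInputParser` helper are hypothetical. It deserializes a body like the one above with System.Text.Json and validates it with `Validator.TryValidateObject`. Note `validateAllProperties: true`. Without it, only `[Required]` attributes are checked, so `[MinLength]` would silently be ignored.

```csharp
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;
using System.Text.Json;

// Hypothetical shape of the request model; the validation attributes are assumptions.
public class CreateZipFileInput
{
    [Required]
    public string? ContainerName { get; set; }

    [Required, MinLength(1)]
    public string[]? FilePaths { get; set; }
}

public static class CreateZipFileInputParser
{
    // Case-insensitive so "containerName" in the body maps to ContainerName.
    private static readonly JsonSerializerOptions Options = new() { PropertyNameCaseInsensitive = true };

    // Deserializes the body and returns the model plus any validation errors.
    public static (CreateZipFileInput? Input, List<ValidationResult> Errors) Parse(string json)
    {
        var errors = new List<ValidationResult>();
        var input = JsonSerializer.Deserialize<CreateZipFileInput>(json, Options);
        if (input is null)
        {
            errors.Add(new ValidationResult("Request body was empty."));
            return (null, errors);
        }

        // validateAllProperties: true makes [MinLength] run, not just [Required].
        Validator.TryValidateObject(input, new ValidationContext(input), errors, validateAllProperties: true);
        return (input, errors);
    }
}
```

An empty `filePaths` array produces one error (from `[MinLength]`), and an empty object `{}` produces two (both `[Required]` checks fail).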

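```csharp
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;
using System.Linq;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.Http;

public class CreateZipHttpFunction
{
    [FunctionName("CreateZipHttpFunction")]
    public ActionResult Run(
        [HttpTrigger(AuthorizationLevel.Function, "POST", Route = "zip")] CreateZipFileInput input,
        [Queue(Queues.CreateZipFile, Connection = "FilesStorageConnectionString")] ICollector<CreateZipFileMessage> queueCollector,
        HttpRequest request)
    {
        // A missing or unparsable body can bind as null, and ValidationContext throws on null.
        if (input is null)
        {
            return new BadRequestObjectResult(new { errors = new[] { "Request body is required." } });
        }

        // validateAllProperties: true runs every validation attribute, not just [Required].
        var validationResults = new List<ValidationResult>();
        if (!Validator.TryValidateObject(input, new ValidationContext(input), validationResults, validateAllProperties: true))
        {
            return new BadRequestObjectResult(new
            {
                errors = validationResults.Select(x => x.ErrorMessage)
            });
        }

        // Hand the work off to the queue-triggered worker and return 202 Accepted right away.
        queueCollector.Add(new CreateZipFileMessage
        {
            ContainerName = input.ContainerName,
            FilePaths = input.FilePaths
        });

        return new AcceptedResult();
    }
}
```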

- Validate the input and return 400 Bad Request if it's invalid

- Create a new CreateZipFileMessage, add it to the queue and return 202 Accepted
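One limit worth keeping in mind with this design: an Azure Storage queue message can be at most 64 KiB, and the Functions queue binding may Base64-encode the serialized message (depending on the extension version and its message-encoding setting), which inflates it by about a third. With thousands of file paths, or long SAS URIs, the message can outgrow that limit. Below is a rough pre-check you could run before `queueCollector.Add`. `CreateZipFileMessage` here is a hypothetical stand-in for the real class, and I'm assuming the binding serializes it as JSON.

```csharp
using System.Collections.Generic;
using System.Text;
using System.Text.Json;

// Hypothetical stand-in for the message class used by the HTTP function.
public class CreateZipFileMessage
{
    public string? ContainerName { get; set; }
    public IReadOnlyCollection<string>? FilePaths { get; set; }
}

public static class QueueMessageSize
{
    // Azure Storage queue messages are limited to 64 KiB.
    public const int MaxMessageBytes = 64 * 1024;

    // Size of the JSON payload after Base64 encoding (4 chars per 3 bytes, padded).
    // Assumption: the real binding's JSON output is close enough for a rough check.
    public static int EncodedSize(CreateZipFileMessage message)
    {
        int jsonBytes = Encoding.UTF8.GetByteCount(JsonSerializer.Serialize(message));
        return 4 * ((jsonBytes + 2) / 3);
    }

    public static bool Fits(CreateZipFileMessage message) => EncodedSize(message) <= MaxMessageBytes;
}
```

If a request doesn't fit, the usual workaround is to store the file list as a blob and only put a reference to it on the queue.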

Queue portion

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;

public class CreateZipFileWorkerQueueFunction
{
    private readonly ICreateZipFileCommand _createZipFileCommand;
    private readonly ILogger<CreateZipFileWorkerQueueFunction> _logger;

    public CreateZipFileWorkerQueueFunction(
        ICreateZipFileCommand createZipFileCommand,
        ILogger<CreateZipFileWorkerQueueFunction> logger)
    {
        _createZipFileCommand = createZipFileCommand ?? throw new ArgumentNullException(nameof(createZipFileCommand));
        _logger = logger ?? throw new ArgumentNullException(nameof(logger));
    }

    [FunctionName("CreateZipFileWorkerQueueFunction")]
    public async Task Run(
        [QueueTrigger(
            queueName: Queues.CreateZipFile,
            Connection = "FilesStorageConnectionString")]
        CreateZipFileMessage message,
        string id, // the queue message id, bound from the trigger metadata
        CancellationToken cancellationToken)
    {
        _logger.LogInformation("Starting to process message {MessageId}", id);

        // All the actual work lives in the command, not in the function.
        await _createZipFileCommand.Execute(message.ContainerName, message.FilePaths, cancellationToken);

        _logger.LogInformation("Processing of message {MessageId} done", id);
    }
}
```

I don't do anything fancy here; I just execute the ICreateZipFileCommand. I do it this way because I want to keep my functions free from business logic.
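Keeping the function thin also makes it easy to exercise without Azure. The interface below is inferred from the `Execute` signature of `AzureBlobStorageCreateZipFileCommand`. `RecordingCreateZipFileCommand` is a hypothetical test double, not part of the original code.

```csharp
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Inferred from AzureBlobStorageCreateZipFileCommand.Execute.
public interface ICreateZipFileCommand
{
    Task Execute(string containerName, IReadOnlyCollection<string> filePaths, CancellationToken cancellationToken);
}

// Hypothetical test double that records calls instead of touching storage.
public class RecordingCreateZipFileCommand : ICreateZipFileCommand
{
    public List<(string ContainerName, IReadOnlyCollection<string> FilePaths)> Calls { get; } = new();

    public Task Execute(string containerName, IReadOnlyCollection<string> filePaths, CancellationToken cancellationToken)
    {
        // Honour cancellation the same way a real implementation would.
        cancellationToken.ThrowIfCancellationRequested();
        Calls.Add((containerName, filePaths));
        return Task.CompletedTask;
    }
}
```

In a unit test you could pass this fake, together with `NullLogger<CreateZipFileWorkerQueueFunction>.Instance`, to the function's constructor and assert on `Calls`.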

Zip portion

Somewhat inspired by Tip 141.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.IO;
using System.Threading;
using System.Threading.Tasks;
using Azure.Storage.Blobs.Models;
using Azure.Storage.Blobs.Specialized;
using ICSharpCode.SharpZipLib.Zip;
using Microsoft.Extensions.Configuration;
using Microsoft.Extensions.Logging;

public class AzureBlobStorageCreateZipFileCommand : ICreateZipFileCommand
{
    private readonly UploadProgressHandler _uploadProgressHandler;
    private readonly ILogger<AzureBlobStorageCreateZipFileCommand> _logger;
    private readonly string _storageConnectionString;
    private readonly string _zipStorageConnectionString;

    public AzureBlobStorageCreateZipFileCommand(
        IConfiguration configuration,
        UploadProgressHandler uploadProgressHandler,
        ILogger<AzureBlobStorageCreateZipFileCommand> logger)
    {
        _uploadProgressHandler = uploadProgressHandler ?? throw new ArgumentNullException(nameof(uploadProgressHandler));
        _logger = logger ?? throw new ArgumentNullException(nameof(logger));
        _storageConnectionString = configuration.GetValue<string>("FilesStorageConnectionString") ?? throw new Exception("FilesStorageConnectionString was null");
        _zipStorageConnectionString = configuration.GetValue<string>("ZipStorageConnectionString") ?? throw new Exception("ZipStorageConnectionString was null");
    }

    public async Task Execute(
        string containerName,
        IReadOnlyCollection<string> filePaths,
        CancellationToken cancellationToken)
    {
        // Timestamp plus a short random suffix, e.g. 20210301120000.1a2b.zip
        var zipFileName = $"{DateTime.UtcNow:yyyyMMddHHmmss}.{Guid.NewGuid().ToString().Substring(0, 4)}.zip";
        var stopwatch = Stopwatch.StartNew();

        try
        {
            // Writable stream that uploads blocks to the "zips" container as data is written.
            using (var zipFileStream = await OpenZipFileStream(zipFileName, cancellationToken))
            using (var zipFileOutputStream = CreateZipOutputStream(zipFileStream))
            {
                // Level 0 = no compression; we only care about speed.
                var level = 0;
                _logger.LogInformation("Using Level {Level} compression", level);
                zipFileOutputStream.SetLevel(level);

                foreach (var filePath in filePaths)
                {
                    var blockBlobClient = new BlockBlobClient(_storageConnectionString, containerName, filePath);
                    var properties = await blockBlobClient.GetPropertiesAsync(cancellationToken: cancellationToken);

                    // Give the entry its size up front, taken from the blob's properties.
                    var zipEntry = new ZipEntry(blockBlobClient.Name)
                    {
                        Size = properties.Value.ContentLength
                    };

                    zipFileOutputStream.PutNextEntry(zipEntry);
                    // Stream the blob straight into the current zip entry.
                    await blockBlobClient.DownloadToAsync(zipFileOutputStream, cancellationToken);
                    zipFileOutputStream.CloseEntry();
                }
            }

            stopwatch.Stop();
            _logger.LogInformation("[{ZipFileName}] DONE, took {ElapsedTime}", zipFileName, stopwatch.Elapsed);
        }
        catch (OperationCanceledException)
        {
            // Also catches TaskCanceledException, which derives from OperationCanceledException.
            // Delete the partial zip; no token is passed because the original one is already cancelled.
            var blockBlobClient = new BlockBlobClient(_zipStorageConnectionString, "zips", zipFileName);
            await blockBlobClient.DeleteIfExistsAsync();
            throw;
        }
    }

    private async Task<Stream> OpenZipFileStream(
        string zipFilename,
        CancellationToken cancellationToken)
    {
        var zipBlobClient = new BlockBlobClient(_zipStorageConnectionString, "zips", zipFilename);

        return await zipBlobClient.OpenWriteAsync(true, options: new BlockBlobOpenWriteOptions
        {
            ProgressHandler = _uploadProgressHandler,
            HttpHeaders = new BlobHttpHeaders
            {
                ContentType = "application/zip"
            }
        }, cancellationToken: cancellationToken);
    }

    private static ZipOutputStream CreateZipOutputStream(Stream zipFileStream)
    {
        // IsStreamOwner = false: the blob stream is disposed by its own using block.
        return new ZipOutputStream(zipFileStream)
        {
            IsStreamOwner = false
        };
    }
}
```

- Create a filename for our new Zip file

- Create and open a writeable stream to Azure storage (for our new Zip file)

- Create a ZipOutputStream and pass the zip file stream to it

- Set the level to 0 (no compression; level 6 is the default).

- For each file path, fetch the blob's properties (to get its size), create a ZipEntry and download the blob straight into the ZipOutputStream.

- Done!
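For comparison, the same idea can be expressed with the BCL's `System.IO.Compression.ZipArchive` instead of SharpZipLib. This is a swapped-in technique, not what the post uses, and it hasn't been benchmarked against SharpZipLib or tried with 100 GB+ files. Each source is copied straight into its entry with `CompressionLevel.NoCompression`, and `ZipArchiveMode.Create` only requires a writable stream, not a seekable one. `StoredZipWriter` and its parameters are hypothetical names; in the test, local `MemoryStream`s stand in for the blobs.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.IO.Compression;
using System.Threading;
using System.Threading.Tasks;

public static class StoredZipWriter
{
    // Streams every source into its own zip entry on `destination`, without compression.
    // Only one entry is open at a time, so memory stays bounded by the copy buffer.
    public static async Task WriteAsync(
        Stream destination,
        IEnumerable<(string EntryName, Func<Stream> OpenSource)> files,
        CancellationToken cancellationToken = default)
    {
        // leaveOpen: true plays the same role as IsStreamOwner = false in SharpZipLib.
        using var archive = new ZipArchive(destination, ZipArchiveMode.Create, leaveOpen: true);

        foreach (var (entryName, openSource) in files)
        {
            var entry = archive.CreateEntry(entryName, CompressionLevel.NoCompression);

            // Both streams are disposed at the end of each iteration, closing the entry.
            await using var entryStream = entry.Open();
            await using var source = openSource();
            await source.CopyToAsync(entryStream, cancellationToken);
        }
    } // Disposing the archive writes the central directory to `destination`.
}
```

Passing a factory (`Func<Stream>`) rather than open streams means each source is only opened when its entry is written, which mirrors creating one `BlockBlobClient` per file inside the loop.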

I've also created a custom IProgress<long> implementation that logs the upload progress of the zip file every 30 seconds.

```csharp
using System;
using System.Diagnostics;
using Microsoft.Extensions.Logging;

// Logs cumulative upload progress at most every 30 seconds.
// State is per instance, so each upload should get its own handler.
public class UploadProgressHandler : IProgress<long>
{
    private bool _initialized;
    private readonly Stopwatch _stopwatch;
    private readonly ILogger<UploadProgressHandler> _logger;
    private DateTime _lastProgressLogged;

    public UploadProgressHandler(ILogger<UploadProgressHandler> logger)
    {
        _logger = logger ?? throw new ArgumentNullException(nameof(logger));
        _lastProgressLogged = DateTime.UtcNow;
        _stopwatch = new Stopwatch();
    }

    // `value` is the total number of bytes uploaded so far.
    public void Report(long value)
    {
        // Start timing on the first report, i.e. when the upload actually begins.
        if (!_initialized)
        {
            _initialized = true;
            _lastProgressLogged = DateTime.UtcNow;
            _stopwatch.Start();
        }

        if ((DateTime.UtcNow - _lastProgressLogged).TotalSeconds >= 30)
        {
            _lastProgressLogged = DateTime.UtcNow;
            var megabytes = value / 1048576; // 1 MB here = 1,048,576 bytes
            var seconds = _stopwatch.ElapsedMilliseconds / 1000d;
            // bytes per second divided by 125,000 = megabits per second
            var averageUploadMbps = Math.Round((value / seconds) / 125000d, 2);
            _logger.LogInformation("Progress: {UploadedMB}MB Average Upload: {AverageUpload}Mbps", megabytes, averageUploadMbps);
        }
    }
}
```
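The conversion in `Report` relies on 1 Mbit/s = 1,000,000 bits/s = 125,000 bytes/s, so dividing bytes per second by 125,000 gives megabits per second. A tiny helper (hypothetical, not in the original code) makes that arithmetic easy to check; for example, 1.5 Gbit/s (1,500 Mbit/s) corresponds to 187,500,000 bytes per second.

```csharp
public static class Throughput
{
    // 1 Mbit/s = 1,000,000 bits/s = 125,000 bytes/s.
    private const double BytesPerSecondPerMbps = 125_000d;

    // Average throughput in Mbit/s for `bytes` transferred over `seconds`; 0 if no time has passed.
    public static double ToMbps(long bytes, double seconds) =>
        seconds > 0 ? bytes / seconds / BytesPerSecondPerMbps : 0d;

    // Inverse conversion: Mbit/s to bytes per second.
    public static double MbpsToBytesPerSecond(double mbps) => mbps * BytesPerSecondPerMbps;
}
```

For example, 1,250,000,000 bytes uploaded in 10 seconds is 1,000 Mbit/s.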

Performance

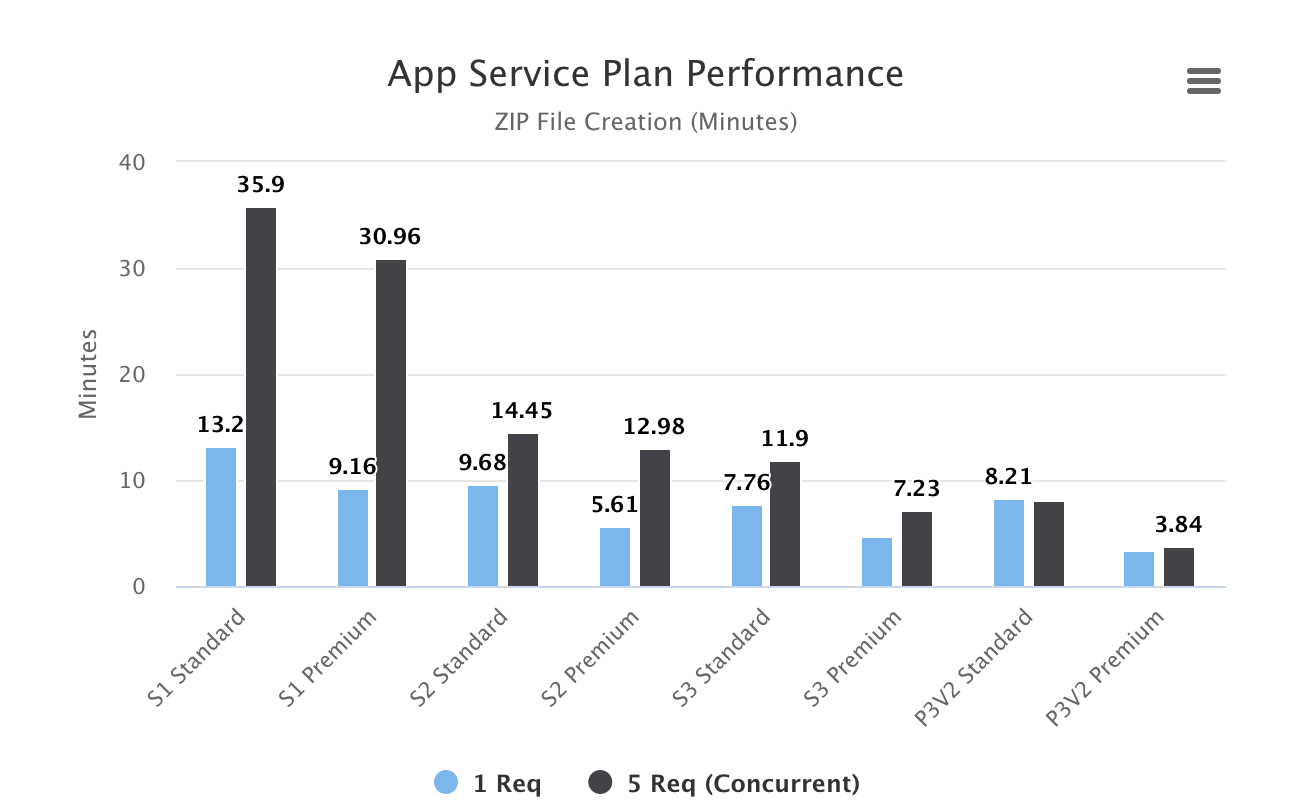

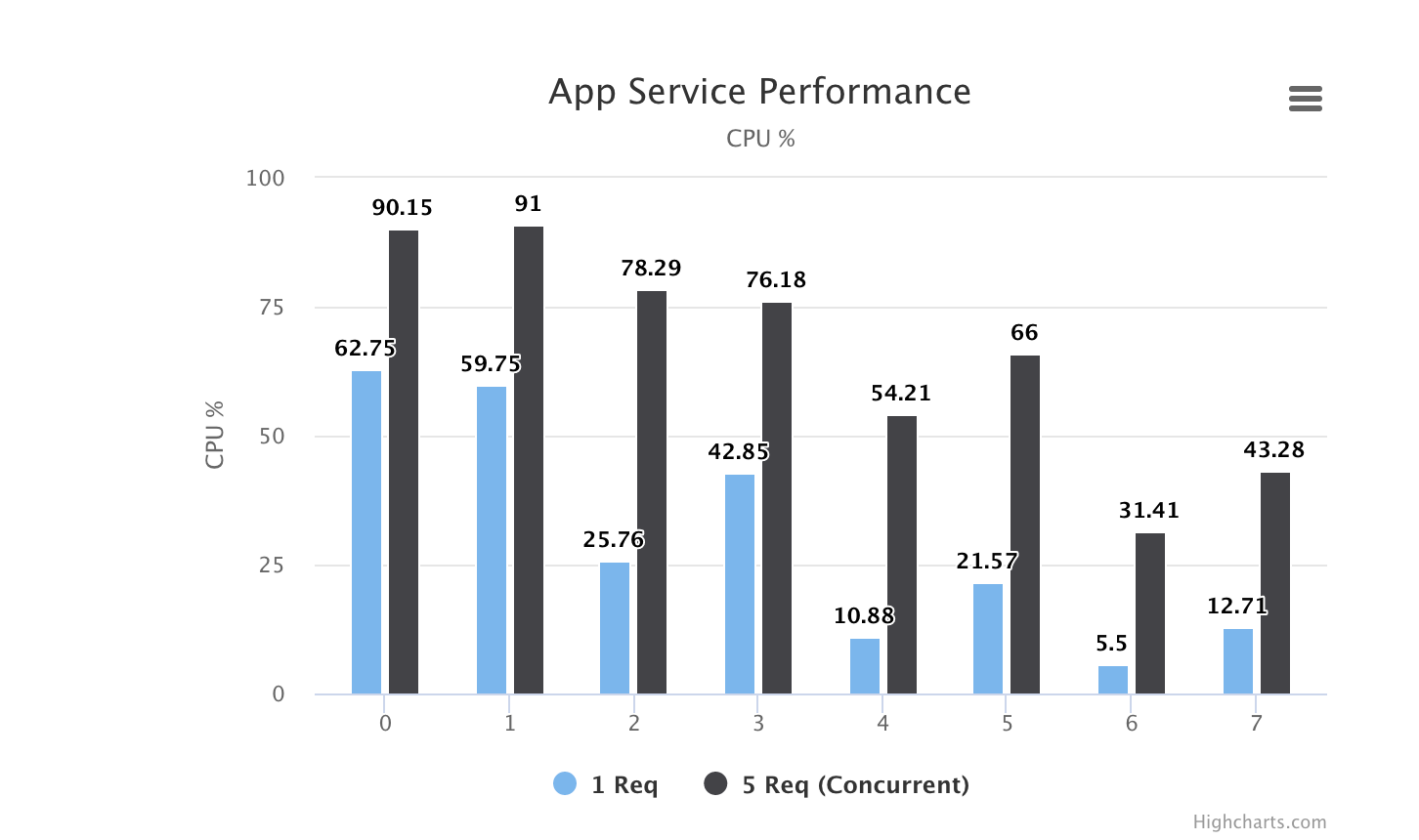

I've done some quick benchmarking with the following App Service plans:

- S1

- S2

- S3

- P3V2

There are four tests per App Service plan:

- 1 request -> Writing the Zip to Standard Blob storage

- 5 concurrent requests -> Writing the Zip to Standard Blob storage

- 1 request -> Writing the Zip to Premium Blob storage

- 5 concurrent requests -> Writing the Zip to Premium Blob storage

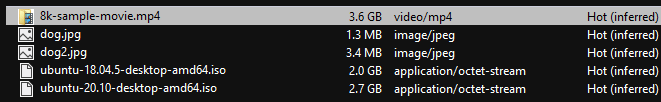

I've uploaded the following files to my storage account:

We use all of these files in every test, so the total size of the files being zipped is ~8.3 GB.

No surprises here really: the more CPU we have, the smaller the difference between 1 and 5 concurrent requests. One thing to note, though, is the difference between Standard and Premium storage: Premium storage significantly lowers the processing time. I assume this has to do with HDD vs SSD storage.

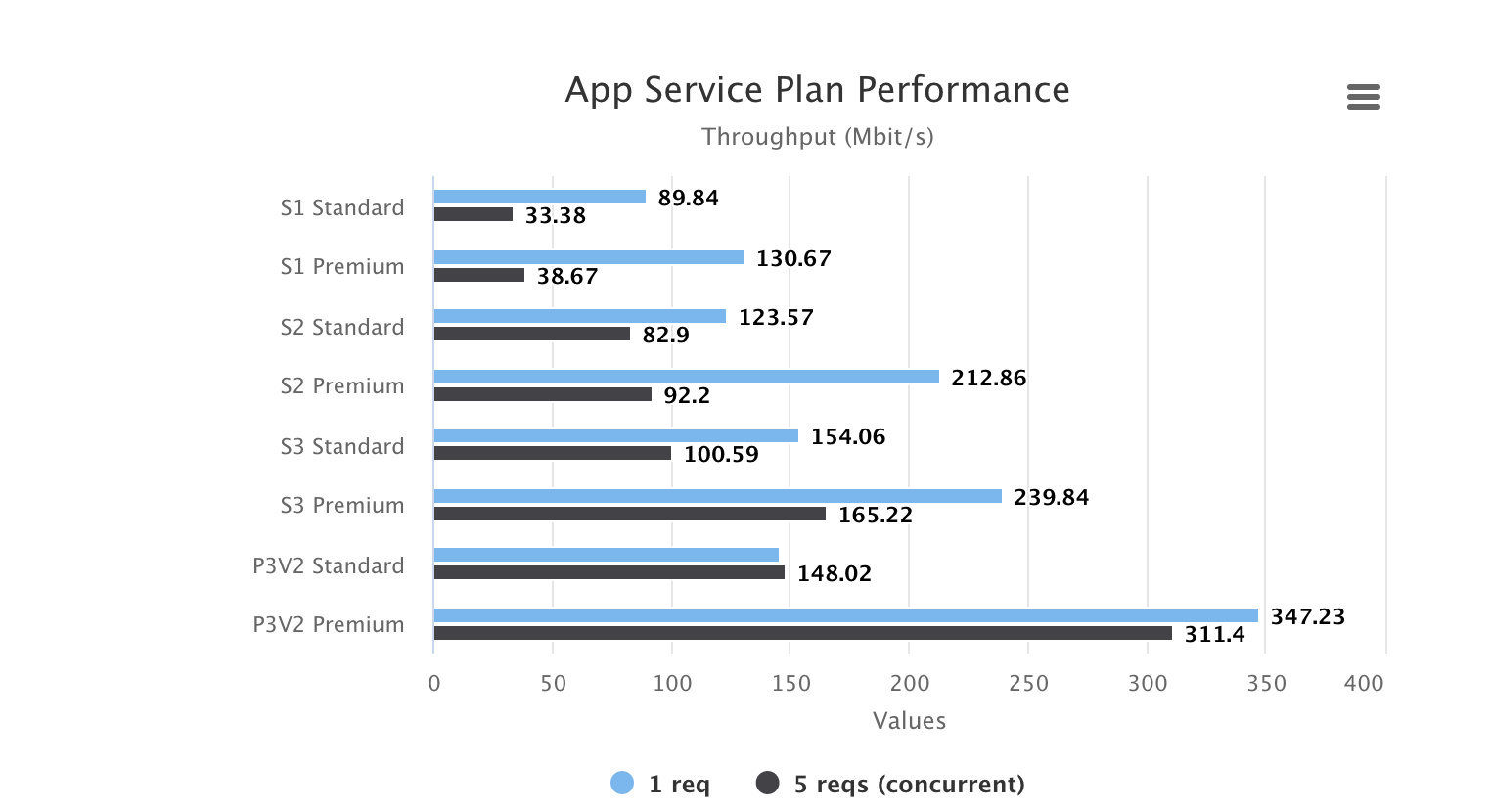

Same story here: more CPU == higher throughput (ofc :) ).

Noteworthy: the P3V2 App Service Plan together with Premium storage achieves ~ 1.5 Gbit/s throughput when sending 5 concurrent requests.

These numbers were taken from the Azure Portal, so they are kinda rough. We can see that both S1 and S2 come close to maxing out the CPU when processing 5 concurrent requests.

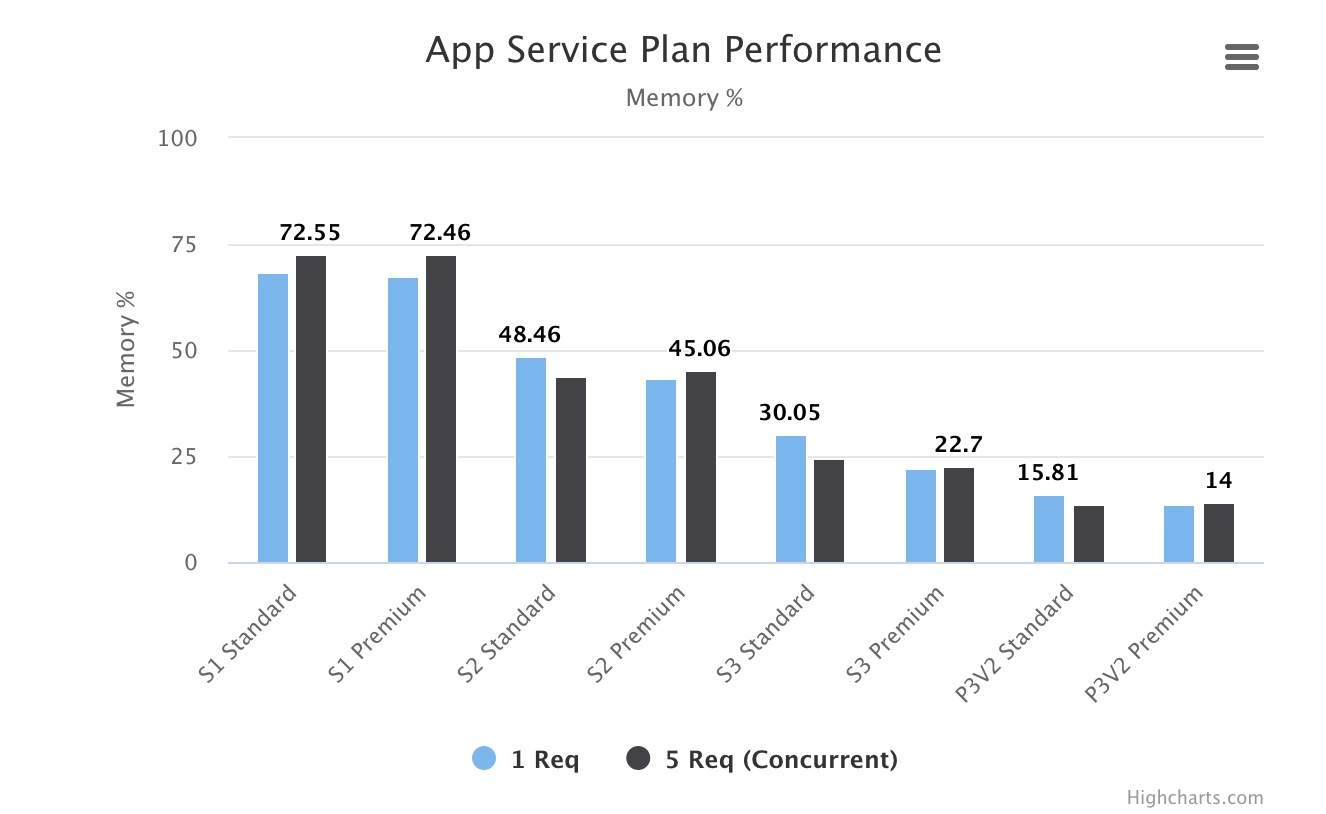

Here we can see that memory usage is stable throughout. Whether we process 1 or 5 requests, the consumption is roughly the same.

All code found in this post is available on GitHub.